When you keep shipping the same kinds of bugs

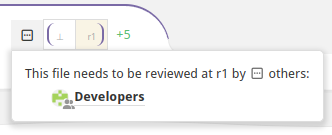

If only the right engineers reviewed the code, right?

Published: Tuesday, January 23, 2024What's the deal then? Why aren't they reviewing it properly?

We've seen this in companies where teams have started to specialize, but the tools don't help them out with it yet. If this story feels oddly familiar we're not calling you out, but you should really consider reading to the end to learn how to get your tools to help you catch mistakes and reduce the frequency of shipping bugs to production.

The rest of this post is a story about a company that kept shipping database-related issues — call them "Sweet.AI". We've seen this play out many times, just with some of the keywords swapped out.

The database kept grinding to a halt

At Sweet.AI, various product teams kept shipping code that brought the production database to its knees.

Every once in a while, someone would add a column or a table incorrectly, or change how an existing one was used without considering normal database things like indexes. This would "work on my computer" since none of the development nor staging databases had millions of rows, but the production database kept growing. As a result, every 2 to 3 months a release would get delayed and a hot fix had to be rushed out the door. If only the team in charge of scaling the databases were reviewing these migrations before they went out to customers!

Let's make sure the right people review the changes

Sweet.AI's initial approach was to "just tell everyone" to request reviews from the "infra team". One engineer took it upon himself to update our onboarding docs, and they told everyone at an all-hands meeting as well. Sure enough, that lasted all of two months before someone didn't think it was necessary for their change and shipped another high-severity bug into production.

They recognized that they had to enforce it somehow, and a couple engineers who had experience from larger tech firms suggested "code ownership" as the enforcement mechanism. This meant that any change to files in certain folders would get reviewed by certain users, and, more concretely, they would use GitHub's CODEOWNERS files to direct pull requests affecting those files to a new @database-admin GitHub team.

Once that was setup, those bugs stopped happening, hooray! However, we replaced a high-priority issue with a simmering, ostensibly low-priority bug that didn't get much attention for a while.

Who was doing these reviews?

Before we get to who, let's explain how these reviews were supposed to go. Whenever a pull request (PR) was created, the @database-admin team was assigned automatically by GitHub, which means that if you were on that team and wanted to respond to reviews immediately, you would be looking at code that wasn't ready for you. To reduce the chances of reviewing the database changes before they were finished being iterated on, the @database-admin team announced they would only look at the code after it was reviewed by at least one member of the author's own team. That way, the schema changes were done and ready to be reviewed for efficiency and correctness.

The @database-admin team consisted of 6 people, with 4 from the "cloud infrastructure" team along with 2

highly-experienced engineers from other teams. However, really only 1 person was doing the reviews, we'll call her Alice. Nobody in the team noticed. Each of the six of them all thought the other five were handling most of the PRs, until the irresponsible five grabbed lunch by chance one day and realized that, in fact, none of them had been reviewing these PRs for months. They must have been getting reviewed, the tools weren't broken after all. So who was it? Poor Alice found out later that day she was bearing the brunt of these reviews.

This had happened because the rest of the team members weren't as responsive in checking these PRs as Alice was. Other engineers learned to just assign Alice directly to get their migrations reviewed, creating a feedback loop, so she ended up reviewing 90% of these PRs.

Retrospective

Looking back at that incident, and all the other teams who relied on CODEOWNERS but ended up with one or two engineers carrying the burden for the entire team or company, there were a few causes:

- Authors had no easy insight into who could review their PR.

- The GitHub team was its own entity that would only get replaced when someone from that team approved the PR. This meant once they received a review, they remembered that username and could easily stick to them from then on.

- Reviewers had no easy way to know whether they should review a particular PR at that moment or if they should wait.

- Were they assigned directly or as part of the

@database-adminteam? - How many files needed their review? If it's only a few they could sneak it in between meetings.

- If they already left comments, were they all replied to or just some? Or none?

- Were they assigned directly or as part of the

Reviewers also couldn't create useful dashboards from GitHub's PR search results. Therefore, they usually wouldn't review a given PR until they were messaged (or "pinged") by the author. This became the code review culture for this GitHub team, as well as others that Sweet.AI created over the years.

Once an engineer reviewed a PR quickly, in response to a message or not, they gained a reputation among the author as well as other reviewers on the PR. Over time, this snowballed until they were picked almost exclusively by PR authors.

Solutions

If you're shipping the same types of bugs into production over and over again, you should definitely be using CODEOWNERS to assign your reviews. This isn't a cautionary tale about CODEOWNERS; rather, we're just explaining that it's not sufficient on its own.

Using Reviewable, we can solve both of the issues above:

- Letting authors pick their reviewers only works on smaller teams. Pretty quickly, you need something to designate reviewers automatically for you. This can be done through our Custom Acceptance Criteria feature, and more advanced use cases can use our designated reviewers feature. For example, Sweet.AI could have assigned a member of the author's team to review all files, and a member of

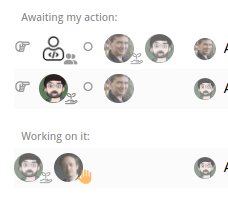

@database-adminto review the database schema files for a newdatabasescope. - The Reviewable dashboard shows reviewers at-a-glance exactly which PRs are assigned to them directly or through a team, how many files and comments to review, if the author has replied to all or some of their comments, and if the PR is blocked on them or not. Using this dashboard, our Slack integration, or our generic webhook integration, engineers can be aware of PRs that match the criteria they care about and be interrupted exactly when they need to be — no more, no less.

We also have builtin support for CODEOWNERS , if you don't want to automate assigning reviewers yet. With or without designating reviewers, your reviewers will still benefit from the Reviewable dashboard, and they'll also see which files they need to review and for which scope. Unlike GitHub, when you're reviewing a PR in Reviewable, you're not in the dark as to why.